前言

- 本篇為 Background Matting: The World is Your Green Screen (BGM) 的第二版,沒看過可先參考我之前寫的文章。

- 此篇針對速度和畫質進行改良,提出了兩個 network,分別是 base network 和 refinement network,並且提出了新的 Dataset, VideoMatte240K 和 PhotoMatte13K/85。

- 關於這篇針對 Segmentation 的部分採用了 DeepLabV3 和 DeepLabV3+ 裡面的 Atrous Spatial Pyramid Pooling (ASPP) module 加入到 network 裡面,進而產生 coarse boundaries,並參考 PointRend 裡面的概念,其透過學習 refinement-region 得到更精細的 high-quality and high-resolutions 的 segmentation,且使用非常少的 memory 和 computation power。

方法

- 由於在 high resolution 的影像上做 matting 其實是非常 computation and memory consumption,但其實大部分的地方不需要處理到太細節,除了像是頭髮或 person’s outline 的地方,因此作者認為特別去設計一個 network 去處理 high resolutions image 太浪費資源,提出了兩個 network 處理這個部分,其中一個處理 low-resolution images,另一個處理一些原始影像大小的 selected patches based on the prediction of the previous network。

Base Network

- input: Source Image(I), Background(B).

- output: alpha matte(α_c), lower resolution foreground(F-R_c), error prediction map(E_c), 32-channgel hidden feature H_c.

- Our base network consists of three modules:Backbone, ASPP, and Decoder

Given an image I and the captured background B we predict the alpha matte α and the foreground F, which will allow us to composite over any new background by I’ = αF + (1−α)B’, where B’ is the new background.

- 1. Backbone

- ResNet-50(main), which can be replaced by ResNet-101 and MobileNetV2 to trade-off between speed and quality.

- 2. ASPP module

consists of multiple dilated convolution filters with different dilation rates of 3,6 and 9.

- 3. Decoder

- 直接看圖比較快,詳細如下原文

Our decoder network applies bilinear upsampling at each step, concatenated with the skip connection from the backbone, and followed by a 3×3 convolution, Batch Normalization, and ReLU activation (except the last layer). The decoder network outputs coarse-grained alpha matte α_c, foreground residual F-R_c, error prediction map E_c and a 32-channel hidden features H_c. The hidden features H_c contain global contexts that will be useful for the refinement network.

Refinement Network

- input: Base Network + I + B

- output: high-resolution output of alpha matte(α) and foreground residual(F) only at select regions.

the refinement network operates only on patches selected based on the error prediction map E_c.

- 其中 E_c 表示大小為原始影像的 1/c 大小的 error prediction map,因此假設 c = 4, E_c = E_4, 則會對應到原始影像 4x4 大小的 patch,而作者選擇了 top k 個最高的 predicted error 值的位置進行 refine,因此總共會在原始影像上 refine 16k 的 pixels。

- 這邊使用 two-stage 的方式,分別使用在 1/2 resolution 和 full resolution 的地方,而在 inference 的時候要 refine 幾個(k) patch 可以直接進行設定,k 越大品質越好速度越慢。

- First stage:

透過 bilinearly resample 成 1/2 大小的 (I, B, α_c, F-R_c, E_c, H_c),而這時候因為解析度又少了一半所以透過 error prediction map E_4 所找出來的 patches 大小會變為 8x8,此時再經過兩個沒有 padding 的 3x3 con, BN, ReLU 讓維度變成 4x4。 - Second stage:

將剛剛的 upsample to 8x8,跟原始大小 (I, B) 的相同地方的 8x8 做 concatenate,再做兩個沒有 padding 的 3x3 con, BN, ReLU 讓維度變成 4x4,但這次的最後一層並沒有做 ReLU,最後會得到 4x4 alpha matte α’_c, foreground residual F’-R_c,然後替換掉相同位置大小的 Coarse Output 的 α_c, F-R_c,最後輸出 α, F 來區分前後景。

Instead of solving for the foreground directly, we solve for foreground residual F_R = F − I. Then, F can be recovered by adding F_R to the input image I with suitable clamping: F = max(min(FR + I, 1), 0). We find this formulation improves learning, and allows us to apply a low resolution foreground residual onto a high-resolution input image through upsampling, aiding our architecture as described later.

- 整體架構如下,有 "*" 表示 ground truth。

資料集

- Adobe Image Matting(AIM): 269 human training samples, 11 test samples, 1000x1000 resolution.

- Distinctions646: 362 training samples, 11 test samples, 1700x2000 resolution.

- VideoMatte240K: 479 training videos, 5 test videos, 384 videos are 4K resolution, 100 videos are in HD.

484 high-resolution green screen videos and generate a total of 240,709 unique frames of alpha mattes and foregrounds with chroma-key software Adobe After Effects. 384 videos are at 4K resolution and 100 are in HD. We split the videos by 479 : 5 to form the train and validation sets.

- PhotoMatte13K/85: 13165 training samples, 500 test samples, 2000x2500 resolution.

13,665 images shot with studio-quality lighting and cameras in front of a green-screen, along with mattes extracted via chroma-key algorithms with manual tuning and error repair. We split the images by 13,165 : 500 to form the train and validation sets. These mattes contain a narrow range of poses but are high resolution, averaging around 2000×2500, and include details such as individual strands of hair. However privacy and licensing issues prevent us from sharing this set; thus, we also collected an additional set of 85 mattes of similar quality for use as a test set, which we are releasing to the public as PhotoMatte85.

We crawl 8861 high-resolution background images from Flickr and Google and split them by 8636 : 200 : 25 to use when constructing the train, validation, and test sets. We will release the test set in which all images have a CC license (see appendix for details).

訓練方式

- 所有的資料集都還有 alpha matte, foreground layer,作者針對 foreground 和 background layer 獨立做許多 data augmentation 像是 affine transformation, horizontal flipping, brightness, hue, and saturation adjustment, blurring, sharpening, and random noise.

- 其他 augmentation 細節如下

We also slightly translate the background to simulate misalignment and create artificial shadows to simulate how the presence of a subject can cast shadows in real-life environments (see appendixfor more details). We randomly crop the images in every minibatch so that the height and width are each uniformly distributed between 1024 and 2048 to support inference at any resolution and aspect ratio.

- 先訓練 base network 到收斂才 jointly train base network 和 refinement network,透過不同 Dataset 的方式進行區分,且訊順序如下。

- VideoMatte240K: base + (base + refine)

- PhotoMatte13K: (base + refine)

- Distinctions-646: (base + refine)

- AIM: 不使用,用了效果變差

實驗結果

Evaluation on composition datasets

- 雖然說有小輸 FBA 一點,但是 FBA 需要輸入自行標註的 high quality trimaps 才有辦法達到這個 performance,且其速度也相對慢。

Evaluation on captured data

- 最近很流行這種比較 real data 的實驗,但為了公平比較所以在 real data 的時候 trimap 是不能手繪的,因此作者使用 DeepLabV3+ 的輸出作為 FBA 的 trimap,效果如下的 FBA_auto。

- 此外也做了一些 real data 的 user study

Performance comparison

- Gmac 是一種與運算單元有關的計算時間方式,詳細我也不清楚,應該是越小越好,但至少可以看到 FPS 的部分此篇論文是快很多的。

- 另外也有比較 model 的參數量和大小

Practical use

- 使用方式和第一版一樣需要先拍一張純背景的影像再進行實際應用,作者也有比較在實際綠背的情況下效果如何。

Ablation Studies

Role of our datasets

- 作者表示就算多 train AIM 然後 test 在 AIM 上效果也會變差,很有可能是因為 AIM 解析度和品質相對其他 dataset 較差,所以可能導致 overfitting

Role of the base network

- Backbone 的使用上雖然說 ResNet-50 在某些 metric 略輸 ResNet-101,但其實已經足夠好了,而是否選擇 MobileNet 就是 time 和 performance 的 trade-off,1x1 的部分是做 Point-based 的嘗試,輸出只會有 2x2 receptive,而 3x3 有 13x13 的 receptive 結果較好。

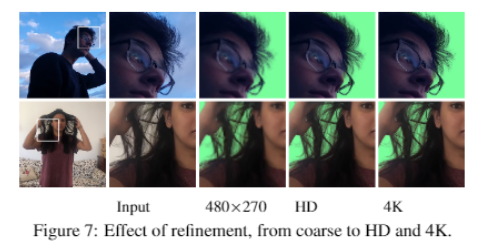

Role of the refinement network

- 針對不同解析度看結果

- 觀察 refinement area 影響比例和 metric 的結果,5%~10% 的部分進步最多。

- k 是指從 error prediction map 選擇多少個 patch。

問題討論

- 使用方式、限制和問題點基本上和第一版差不多,此篇主要是針對速度和解析度進行優化

其他參考連結

[BGMv2]